KubeStory #002: The Kubernetes Gateway API's story will be game-changing

A glance at what will be, undoubtedly, the future of Service Networking for Kubernetes

This story will not narrate a customer experience. Instead, it takes a glance at what will undoubtedly be the future of Service Networking for Kubernetes.

Hosting pods that require internal or external exposure involves interaction with the Kubernetes Service Networking components.

However, there is no universal way of interacting with these components.

Whether you are exposing your workloads within the cluster or to external users on the internet, the methods, objects, and configurations you use will differ.

You have to consider the client network, the protocol, and the application's regional scope. Then you need to decide whether this is a use case for client-side Ingress, a service mesh, or other solutions.

How do you expose your services amid this wealth of possibilities?

It is challenging to make the right decision before or during implementation. As the requirements of the hosted pods evolve, so does the need for other ways to expose them.

You could start with the Service resource. You are most likely to encounter three Service types: ClusterIP, NodePort, and LoadBalancer. Each of them is well suited to the problem it solves.
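To make the three types concrete, here is a minimal sketch that builds a Service manifest as a Python dictionary and prints it as JSON (`kubectl apply -f` accepts JSON as well as YAML). The Service name `web`, the `app: web` label, and the port numbers are hypothetical; the point is that only `spec.type` changes between the variants.

```python
import json

# The three Service types discussed here (ExternalName is left aside).
VALID_TYPES = ("ClusterIP", "NodePort", "LoadBalancer")

def service(service_type: str) -> dict:
    """Minimal core/v1 Service manifest; only spec.type changes between variants."""
    if service_type not in VALID_TYPES:
        raise ValueError(f"unsupported Service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "web"},  # hypothetical name
        "spec": {
            "type": service_type,
            # Pods labelled app=web (hypothetical label) receive the traffic.
            "selector": {"app": "web"},
            # Port 80 on the Service forwards to port 8080 in the pods.
            "ports": [{"port": 80, "targetPort": 8080, "protocol": "TCP"}],
        },
    }

for t in VALID_TYPES:
    print(json.dumps(service(t), indent=2))
```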

However, this story focuses on fine-grained HTTP load balancing.

In this scenario, the Ingress API has long been the interface for exposing workloads to external users over HTTP.

The Ingress API defines the current standard for HTTP traffic.

Ingress API objects apply and update the configuration of the underlying load balancer. They bring user-friendly control over HTTP traffic matching, traffic routing, and load balancing. Every operation performed on the load balancer through an Ingress object could be done manually, but it would require considerable and largely wasted effort.

The Ingress API is just a specification that requires an implementation. Its two main components are Ingress controllers and routing rules.
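Both components show up in a single Ingress object: `ingressClassName` selects which installed controller handles it, and `rules` hold the routing. The following is a minimal sketch of a `networking.k8s.io/v1` Ingress built as a Python dictionary and printed as JSON; the `nginx` class name, the `shop.example.com` host, and the `cart` and `catalog` Services are hypothetical placeholders.

```python
import json

# Hypothetical names: IngressClass "nginx", host "shop.example.com",
# Services "cart" and "catalog".
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "shop"},
    "spec": {
        # Selects which installed Ingress controller implements this object.
        "ingressClassName": "nginx",
        # Routing rules: host + path prefix -> backend Service and port.
        "rules": [{
            "host": "shop.example.com",
            "http": {"paths": [
                {"path": "/cart", "pathType": "Prefix",
                 "backend": {"service": {"name": "cart", "port": {"number": 80}}}},
                {"path": "/", "pathType": "Prefix",
                 "backend": {"service": {"name": "catalog", "port": {"number": 80}}}},
            ]},
        }],
    },
}

print(json.dumps(ingress, indent=2))
```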

There is no built-in controller for Ingress objects. Over the years, the community has built many implementations to answer evolving needs.

The existing model can't keep up with the constantly evolving solutions.

Cloud providers invest a lot of time, money, and effort in making their infrastructure more robust and flexible to support a broader range of use cases.

Today's load balancer implementations can support traffic splitting, mirroring, and advanced routing strategies.

However, the Ingress and Service resources are too simple to express these advanced use cases. The result was a proliferation of annotations that made consistency, testing, and the user experience more complex.

Most of the time, these annotations are not portable and can be painful to manage across multiple platforms.
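Weighted traffic splitting is a good illustration. With the ingress-nginx controller, a canary is expressed by a second Ingress for the same host carrying the `nginx.ingress.kubernetes.io/canary` and `nginx.ingress.kubernetes.io/canary-weight` annotations. These keys belong to ingress-nginx, not to the Ingress specification, so another controller would ignore them or expect different ones. The sketch below assumes ingress-nginx; the host and the `shop-v2` Service are hypothetical.

```python
import json

def canary_ingress(weight: int) -> dict:
    """Second Ingress for the same host, marked as a canary via ingress-nginx annotations."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": "shop-canary",
            "annotations": {
                # ingress-nginx-specific keys: not part of the Ingress spec itself.
                "nginx.ingress.kubernetes.io/canary": "true",
                # Annotation values are strings, so the weight is stringified.
                "nginx.ingress.kubernetes.io/canary-weight": str(weight),
            },
        },
        "spec": {
            "ingressClassName": "nginx",
            "rules": [{"host": "shop.example.com", "http": {"paths": [
                {"path": "/", "pathType": "Prefix",
                 "backend": {"service": {"name": "shop-v2", "port": {"number": 80}}}},
            ]}}],
        },
    }

manifest = canary_ingress(10)
# The split lives in free-form strings, so the Ingress schema cannot validate it.
print(json.dumps(manifest["metadata"]["annotations"], indent=2))
```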

The Gateway API is the next standard.

At the value-proposition level, the Gateway API replaces one specification with another. However, the purpose of this change is to bring more flexibility, portability, and reusability, in addition to advanced features.

As with every Kubernetes specification, every implementation will have to support the core capabilities, and every extended capability will need to be portable if implemented. However, Gateway implementations may also add custom, implementation-specific capabilities that are not necessarily portable.

The Gateway API is a collection of resources.

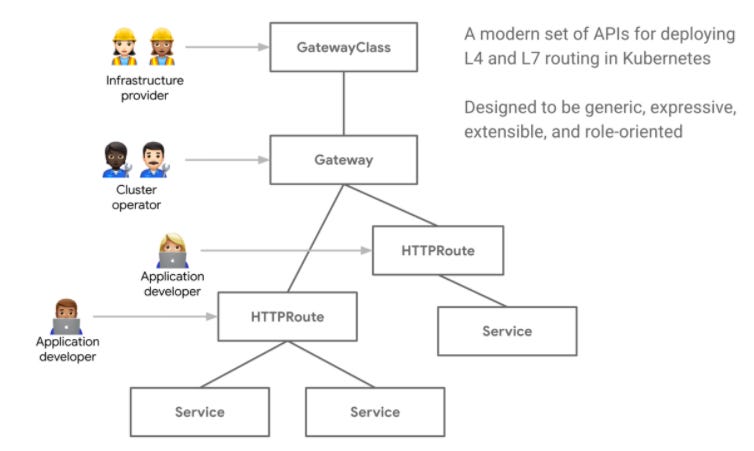

As you can see in the diagram above, multiple resources are involved in the design of the Gateway API.

GatewayClass: a template for load-balancing implementations. These classes make it easy and explicit for users to understand which capabilities are available through the Kubernetes resource model.

For example, every cloud provider will offer classes matching its load balancer offerings, as the GKE Gateway Controller does. You will also find vendor implementations such as Traefik, Istio, Gloo Edge, or Contour.

Most of the time, users will not create a GatewayClass themselves. However, they will have to reference one in the Gateway configuration.
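Even if you rarely write one, a GatewayClass is a small object: a cluster-scoped name plus the `controllerName` of the controller that implements it. The sketch below assumes the `gateway.networking.k8s.io/v1` API version (pre-GA releases used `v1alpha2` and `v1beta1`); the class name `example-lb` and the controller name are hypothetical stand-ins for what a provider or vendor actually installs.

```python
import json

# Hypothetical values: "example-lb" and "example.com/gateway-controller"
# stand in for the class a provider or vendor actually ships.
gateway_class = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "GatewayClass",
    # Cluster-scoped resource: no namespace in metadata.
    "metadata": {"name": "example-lb"},
    "spec": {
        # Identifies the controller responsible for Gateways of this class.
        "controllerName": "example.com/gateway-controller",
    },
}

print(json.dumps(gateway_class, indent=2))
```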

Gateway: A Gateway's behavior derives from its GatewayClass implementation. The Gateway describes the traffic flow between the load balancer and the targeted Services.

In addition to referencing its GatewayClass, the Gateway contains listeners, each of which binds Route resources to a specific port/protocol association.
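A minimal sketch of a Gateway shows both parts: the `gatewayClassName` reference and a listener tied to one port/protocol pair. It assumes the `gateway.networking.k8s.io/v1` API version; the Gateway name, the `infra` namespace, and the `example-lb` class are hypothetical. `allowedRoutes` controls which namespaces may attach Routes to the listener.

```python
import json

# Hypothetical names: Gateway "shared-gateway" in namespace "infra", class "example-lb".
gateway = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "Gateway",
    "metadata": {"name": "shared-gateway", "namespace": "infra"},
    "spec": {
        # Reference to the GatewayClass that implements this Gateway.
        "gatewayClassName": "example-lb",
        "listeners": [
            {
                # One listener = one port/protocol association that Routes attach to.
                "name": "http",
                "protocol": "HTTP",
                "port": 80,
                # Routes from any namespace may attach to this listener.
                "allowedRoutes": {"namespaces": {"from": "All"}},
            }
        ],
    },
}

print(json.dumps(gateway, indent=2))
```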

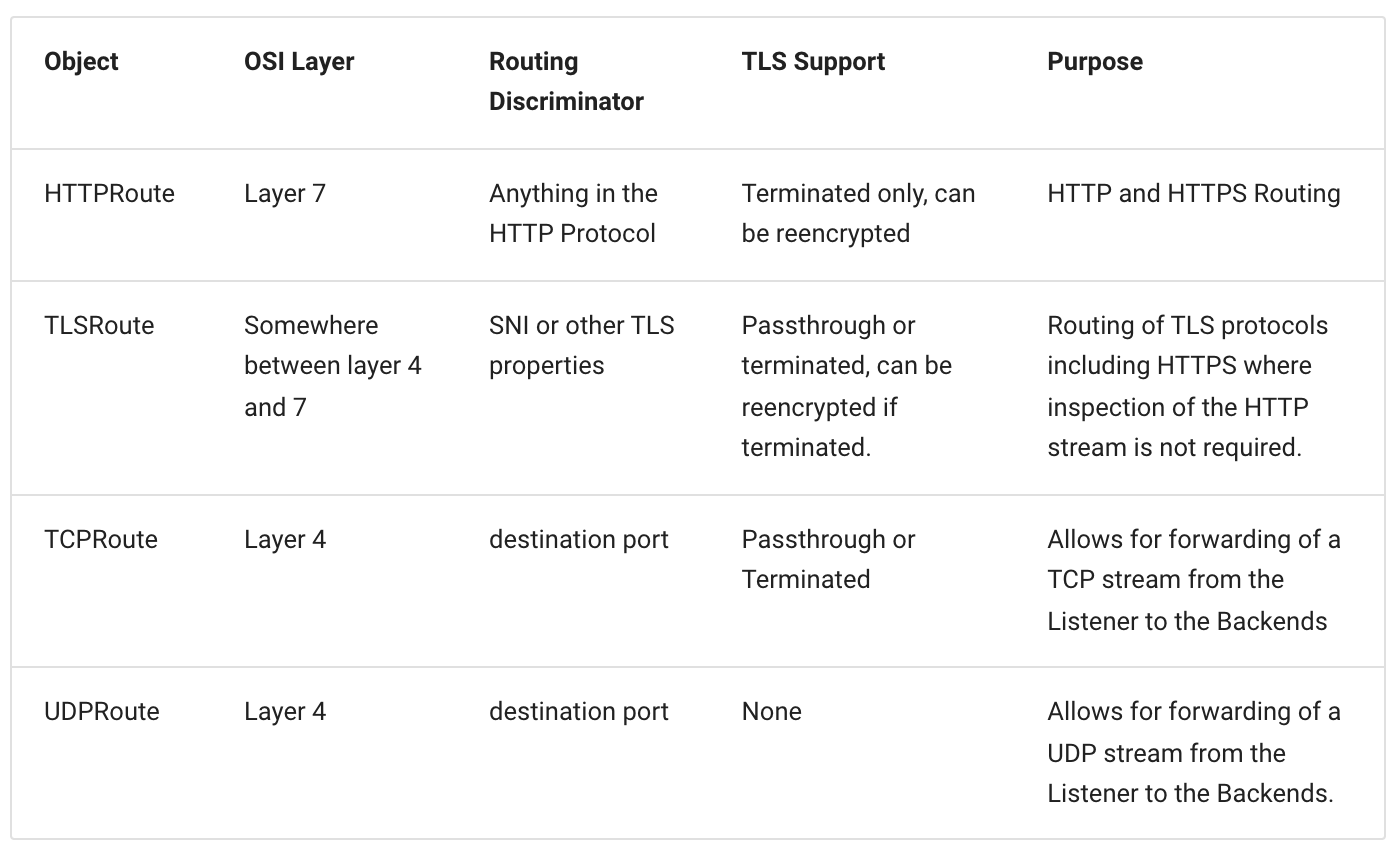

Route: Route resources define protocol-specific rules for mapping requests from a Gateway to Kubernetes Services.

The Gateway API defines a set of route types you can use, and it also allows you to build custom ones.

The following table lists the available route types.

More types may be added to the specification in the future.
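For HTTP, the route type is HTTPRoute. The sketch below attaches one to a Gateway through `parentRefs` and forwards requests under `/api` to a Service. It assumes the `gateway.networking.k8s.io/v1` API version; the Gateway `shared-gateway` in `infra`, the `store-team` namespace, the hostname, and the `store` Service are hypothetical, and the target Gateway is assumed to accept Routes from other namespaces.

```python
import json

# Hypothetical names: Gateway "shared-gateway" in "infra", Service "store" on port 8080.
http_route = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "HTTPRoute",
    "metadata": {"name": "store", "namespace": "store-team"},
    "spec": {
        # The Gateway this Route attaches to.
        "parentRefs": [{"name": "shared-gateway", "namespace": "infra"}],
        "hostnames": ["store.example.com"],
        "rules": [
            {
                # Requests whose path starts with /api go to the store Service.
                "matches": [{"path": {"type": "PathPrefix", "value": "/api"}}],
                "backendRefs": [{"name": "store", "port": 8080}],
            }
        ],
    },
}

print(json.dumps(http_route, indent=2))
```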

The SIG website offers an interesting summary of the relationships between Gateways and Routes:

One-to-one - A Gateway and Route may be deployed and used by a single owner and have a one-to-one relationship.

One-to-many - A Gateway can have many Routes bound to it that are owned by different teams from across different Namespaces.

Many-to-one - Routes can also be bound to more than one Gateway, allowing a single Route to control application exposure simultaneously across different IPs, load balancers, or networks.
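Two of these relationships can be sketched in a few lines, assuming the `gateway.networking.k8s.io/v1` API version. One-to-many: each Gateway listener accepts Routes from any namespace carrying a given label (`allowedRoutes` with `from: Selector`). Many-to-one: a single HTTPRoute lists two Gateways in `parentRefs`. All names, class names, and the `shared-gateway-access` label are hypothetical; for the Route to be accepted, its `store-team` namespace would need that label.

```python
import json

# Hypothetical names throughout: Gateways "external-gw" / "internal-gw" in "infra",
# classes "example-external-lb" / "example-internal-lb", label shared-gateway-access=true.

def gateway(name: str, class_name: str) -> dict:
    """One-to-many: accept Routes from every namespace carrying a given label."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "Gateway",
        "metadata": {"name": name, "namespace": "infra"},
        "spec": {
            "gatewayClassName": class_name,
            "listeners": [{
                "name": "http", "protocol": "HTTP", "port": 80,
                "allowedRoutes": {"namespaces": {
                    "from": "Selector",
                    "selector": {"matchLabels": {"shared-gateway-access": "true"}},
                }},
            }],
        },
    }

# Many-to-one: a single Route bound to both Gateways at once.
route = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "HTTPRoute",
    "metadata": {"name": "store", "namespace": "store-team"},
    "spec": {
        "parentRefs": [
            {"name": "external-gw", "namespace": "infra"},
            {"name": "internal-gw", "namespace": "infra"},
        ],
        "rules": [{"backendRefs": [{"name": "store", "port": 8080}]}],
    },
}

manifests = [
    gateway("external-gw", "example-external-lb"),
    gateway("internal-gw", "example-internal-lb"),
    route,
]
print(json.dumps(manifests, indent=2))
```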

If you want to see examples, this repository contains recipes built by the Google team for the GKE Gateway Controller.

A role-oriented API design.

Splitting the API into multiple resources sets firm boundaries for responsibility and access management.

You can map RBAC permissions to the roles defined by the specification to restrict authorizations.

Developers can then create their own Route objects to control forwarding, but they cannot change the load balancer configuration (defined in the Gateway object).

This separation is a huge step forward compared to the Ingress object. Furthermore, it enforces a strong separation of concerns during the implementation phase.
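As a rough sketch of that split, a namespaced RBAC Role can grant developers full control over HTTPRoutes while granting nothing on Gateways or GatewayClasses. Because RBAC only adds permissions, anything not granted is denied. The `store-team` namespace and the `route-editor` name are hypothetical, the Role would still need a RoleBinding to your developer group, and the `allows` helper is a simplified, hypothetical check that ignores wildcards and other bindings.

```python
import json

# Hypothetical names: namespace "store-team", Role "route-editor".
GATEWAY_GROUP = "gateway.networking.k8s.io"

developer_role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "route-editor", "namespace": "store-team"},
    "rules": [
        {   # Developers fully manage HTTPRoutes in their own namespace.
            "apiGroups": [GATEWAY_GROUP],
            "resources": ["httproutes"],
            "verbs": ["get", "list", "watch", "create", "update", "patch", "delete"],
        },
        # No rule mentions gateways or gatewayclasses, so they stay out of reach.
    ],
}

def allows(role: dict, group: str, resource: str, verb: str) -> bool:
    """Simplified check: ignores wildcards, resourceNames, and other bindings."""
    return any(
        group in rule["apiGroups"] and resource in rule["resources"] and verb in rule["verbs"]
        for rule in role["rules"]
    )

print(json.dumps(developer_role, indent=2))
print(allows(developer_role, GATEWAY_GROUP, "httproutes", "create"))  # True
print(allows(developer_role, GATEWAY_GROUP, "gateways", "update"))    # False
```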

I strongly recommend reading the SIG website to understand the implications of this role-oriented API, as it is one of the most important additions to this new API.

I have already seen customer implementations where the Route and Gateway manifests live in different folders to emphasize this separation. You can also use policy engines (Kyverno, OPA, or others) to restrict which GatewayClasses or Routes can be used.

Already a lot of added value.

The Gateway API is not even GA yet, and multiple advanced use cases are already available, for example:

HTTP header-based matching

HTTP header manipulation

Weighted traffic splitting

Traffic mirroring
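All four features can be expressed in a single HTTPRoute without any annotation. The sketch below assumes the `gateway.networking.k8s.io/v1` API version; every name is hypothetical, and support for some filters, such as request mirroring, is optional ("Extended") and may vary between implementations. The hypothetical `traffic_share` helper shows that `weight` values are relative rather than percentages (an omitted weight defaults to 1).

```python
import json

# Hypothetical names: Services "store-v1", "store-v2", "store-shadow"; Gateway "shared-gateway".
route = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "HTTPRoute",
    "metadata": {"name": "store", "namespace": "store-team"},
    "spec": {
        "parentRefs": [{"name": "shared-gateway", "namespace": "infra"}],
        "rules": [
            {   # HTTP header-based matching: requests with "env: canary" go straight to v2.
                "matches": [{"headers": [{"type": "Exact", "name": "env", "value": "canary"}]}],
                "backendRefs": [{"name": "store-v2", "port": 8080}],
            },
            {   # All other requests (no matches means a "/" path prefix match).
                "filters": [
                    # HTTP header manipulation before forwarding to the backend.
                    {"type": "RequestHeaderModifier",
                     "requestHeaderModifier": {
                         "add": [{"name": "x-routed-by", "value": "gateway-api"}],
                         "remove": ["x-debug"]}},
                    # Traffic mirroring: a copy of each request goes to the shadow Service.
                    {"type": "RequestMirror",
                     "requestMirror": {"backendRef": {"name": "store-shadow", "port": 8080}}},
                ],
                # Weighted traffic splitting between two versions.
                "backendRefs": [
                    {"name": "store-v1", "port": 8080, "weight": 9},
                    {"name": "store-v2", "port": 8080, "weight": 1},
                ],
            },
        ],
    },
}

def traffic_share(rule: dict) -> dict:
    """Fraction of a rule's traffic each backend receives; weight defaults to 1."""
    total = sum(b.get("weight", 1) for b in rule["backendRefs"])
    return {b["name"]: b.get("weight", 1) / total for b in rule["backendRefs"]}

print(json.dumps(route, indent=2))
print(traffic_share(route["spec"]["rules"][1]))  # {'store-v1': 0.9, 'store-v2': 0.1}
```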

Most importantly, the specification leaves room for further improvements. In this video, a GKE Product Manager from Google Cloud mentions:

References to arbitrary CRDs (buckets, functions, and others)

Routing for other protocols

Custom parameters or configuration

A better case for portability

Thanks to the separation between the GatewayClass and the Gateway, changing cloud provider or Kubernetes hosting can come down to updating the gatewayClassName value. That's it.

This effortless change allows better portability and also more consistency between environments. Using different annotations from one stage to another is not rare, even when you use only one cloud provider.
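A small sketch can make that claim tangible: generate the same Gateway for two providers and diff the results. It assumes the `gateway.networking.k8s.io/v1` API version and a Gateway that relies only on portable features (implementation-specific parameters would still need review); the class names `provider-a-lb` and `provider-b-lb` and the `diff` helper are hypothetical.

```python
import json

def gateway(class_name: str) -> dict:
    """Same Gateway definition everywhere; only the GatewayClass reference varies."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "Gateway",
        "metadata": {"name": "shared-gateway", "namespace": "infra"},
        "spec": {
            "gatewayClassName": class_name,
            "listeners": [{"name": "http", "protocol": "HTTP", "port": 80}],
        },
    }

def diff(a: dict, b: dict, path: str = "") -> list:
    """Return the dotted paths of values that differ between two nested dicts."""
    changes = []
    for key in sorted(a.keys() | b.keys()):
        va, vb = a.get(key), b.get(key)
        where = f"{path}.{key}" if path else key
        if isinstance(va, dict) and isinstance(vb, dict):
            changes += diff(va, vb, where)
        elif va != vb:
            changes.append(where)
    return changes

# Hypothetical class names for two different providers.
on_provider_a = gateway("provider-a-lb")
on_provider_b = gateway("provider-b-lb")
print(json.dumps(on_provider_b, indent=2))
print(diff(on_provider_a, on_provider_b))  # ['spec.gatewayClassName']
```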

There is already plenty to read.

Here is a small list of recommendations if you want to learn more about the Gateway API:

Playlist of videos: the Google team presents a series of videos

IstioCon conference: presented by John Howard from Google, an incredible software engineer.

Time goes fast, so start experimenting now.

With Kubernetes, clusters are constantly evolving. Most organizations roll out changes in waves, running different versions across their environments. It is a good idea to use the same methodology to start testing the Gateway API early. As described in this story, multiple profiles (operators, developers, security teams, and others) will be involved, so testing your organization's reaction is as important as the technical results.

It is already available.

We are getting closer to the finish line, even if it is not GA yet, so don't wait: start deploying it on your clusters. You can keep using the Ingress API in the same clusters at the same time.

GA is coming soon, and the ecosystem is already adapting.

Many Gateway API contributors already offer their own implementations. The list grows every day, so adopting early should mean less friction later.

The Ingress API is here to stay.

The arrival of this new specification does not sound the death knell for the Ingress API. No Ingress deprecation has been announced for future releases, and we will see Ingress used in clusters for many versions to come.

What will be your story with the Gateway API?

Tell us about your Gateway API project in the comment section, and if you liked this story, share it with every Kubernetes Storyteller.